Linear Regression Using Python

1. Theory

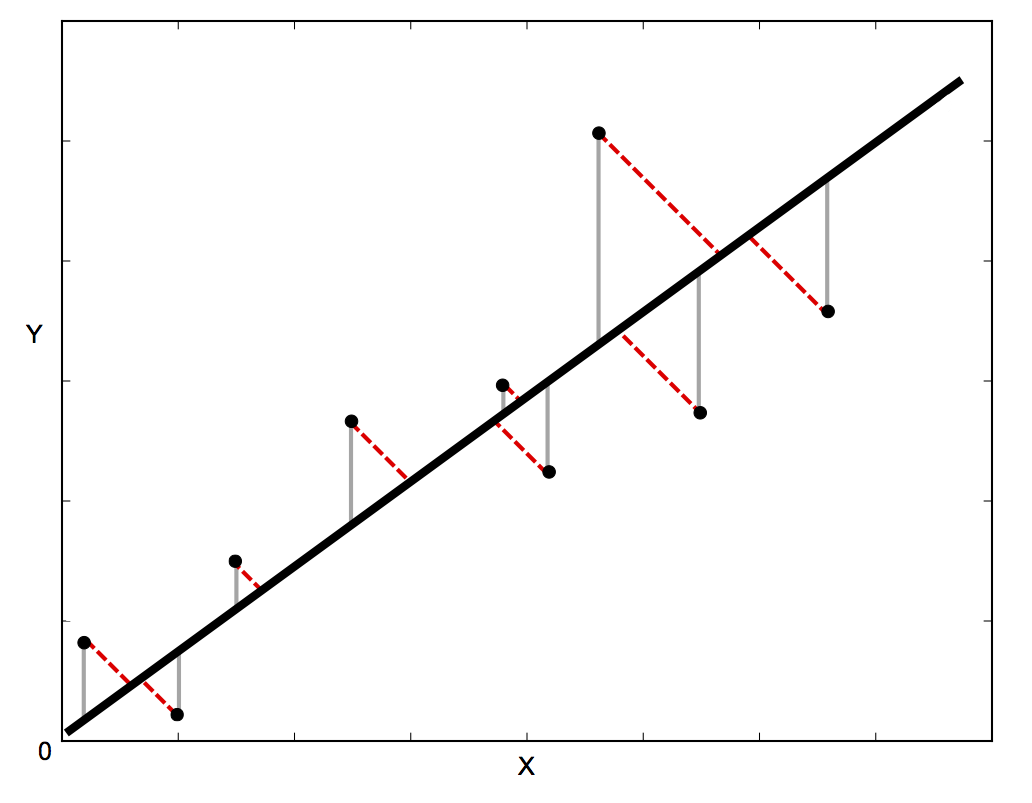

Suppose that you want to fit a set of data points $(x_i, y_i)$, where $i = 1, 2, \ldots, N$, to a straight line, $y = ax + b$. The process of determining the best-fit line is called linear regression. This involves choosing the parameters $a$ and $b$ to minimize the sum of the squares of the differences between the data points and the linear function. How the differences are defined varies. If there are only uncertainties in the $y$ direction, then the differences in the vertical direction (the gray lines in the figure) are used. If there are uncertainties in both the $x$ and $y$ directions, the orthogonal (perpendicular) distances from the line (the dotted red lines in the figure) are used.
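The two kinds of differences can be compared numerically. The following is a minimal sketch, assuming the line is written as $y = ax + b$; the function names and the sample points are made up for illustration.

```python
import numpy as np

def vertical_residuals(x, y, a, b):
    """Vertical differences between the points and the line y = a*x + b."""
    return y - (a * x + b)

def orthogonal_distances(x, y, a, b):
    """Perpendicular distances from the points to the line y = a*x + b."""
    return np.abs(a * x - y + b) / np.sqrt(a**2 + 1.0)

# Illustrative points and a trial line y = x (not real lab data)
x = np.array([0.0, 1.0, 2.0])
y = np.array([1.0, 1.0, 4.0])
a, b = 1.0, 0.0

print(vertical_residuals(x, y, a, b))
print(orthogonal_distances(x, y, a, b))
```

For a line with nonzero slope, the orthogonal distance is always smaller than the magnitude of the vertical difference, by a factor of $\sqrt{a^2 + 1}$.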

Image from http://blog.rtwilson.com/orthogonal-distance-regression-in-python/

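For the case where there are only uncertainties in the $y$ direction, there is an analytical solution to the problem. If the uncertainty in $y_i$ is $\sigma_i$, then the difference squared for each point is weighted by $w_i = 1/\sigma_i^2$. If there are no uncertainties, each point is given an equal weight of one. The function to be minimized with respect to variations in the parameters, $a$ and $b$, is

$$\chi^2 = \sum_{i=1}^{N} w_i \left( y_i - a x_i - b \right)^2 .$$

The lab reference manual gives equations for the best values, but those don't take the uncertainties into account. The analytical solutions for the best-fit parameters that minimize $\chi^2$ (see pp. 181-189 of An Introduction to Error Analysis: The Study of Uncertainties in Physical Measurements by John R. Taylor, for example) are

$$a = \frac{\sum w_i \sum w_i x_i y_i - \sum w_i x_i \sum w_i y_i}{\sum w_i \sum w_i x_i^2 - \left( \sum w_i x_i \right)^2}$$

and

$$b = \frac{\sum w_i x_i^2 \sum w_i y_i - \sum w_i x_i \sum w_i x_i y_i}{\sum w_i \sum w_i x_i^2 - \left( \sum w_i x_i \right)^2} .$$

The uncertainties in the parameters are

$$\sigma_a = \sqrt{\frac{\sum w_i}{\sum w_i \sum w_i x_i^2 - \left( \sum w_i x_i \right)^2}}$$

and

$$\sigma_b = \sqrt{\frac{\sum w_i x_i^2}{\sum w_i \sum w_i x_i^2 - \left( \sum w_i x_i \right)^2}} .$$

All of the sums in the four previous equations are over $i$ from 1 to $N$.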
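The weighted least-squares expressions for this case can be sketched in NumPy. This is an illustration only, not the contents of fitting.py: it assumes the line is $y = ax + b$ with weights $1/\sigma_i^2$, and the function name `weighted_line_fit` is hypothetical.

```python
import numpy as np

def weighted_line_fit(x, y, sigma=None):
    """Fit y = a*x + b by weighted least squares (hypothetical helper).

    sigma holds the uncertainties in y. If it is None, every point gets
    a weight of one, and the returned uncertainties then correspond to
    sigma = 1 rather than to real measurement errors.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if sigma is None:
        w = np.ones_like(x)
    else:
        w = 1.0 / np.asarray(sigma, dtype=float) ** 2

    # Weighted sums over all data points
    S = w.sum()
    Sx = (w * x).sum()
    Sy = (w * y).sum()
    Sxx = (w * x * x).sum()
    Sxy = (w * x * y).sum()
    delta = S * Sxx - Sx**2  # common denominator

    a = (S * Sxy - Sx * Sy) / delta      # slope
    b = (Sxx * Sy - Sx * Sxy) / delta    # intercept
    sa = np.sqrt(S / delta)              # uncertainty in the slope
    sb = np.sqrt(Sxx / delta)            # uncertainty in the intercept
    return a, b, sa, sb

# Points lying exactly on y = 2x + 1, each with uncertainty 0.5 in y
x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]
a, b, sa, sb = weighted_line_fit(x, y, sigma=[0.5, 0.5, 0.5, 0.5])
print(a, b, sa, sb)
```

Because the sample points lie exactly on a line, the fit recovers the slope and intercept, while the parameter uncertainties depend only on the $x$ values and the weights.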

For the case where there are uncertainties in both $x$ and $y$, there is no analytical solution. The more complex method used in that case is called orthogonal distance regression (ODR).

2. Implementation in Python

The linear_fit function that performs these calculations is defined in the file "fitting.py". The file must be located in the same directory as the Python program using it. If there are no uncertainties or only uncertainties in the $y$ direction, the analytical expressions above are used. If there are uncertainties in both the $x$ and $y$ directions, the scipy.odr module is used.

(a) Uncertainty in the y Direction

To perform a linear fit with uncertainties in the $y$ direction, first import the linear_fit function from fitting.py. Arrays containing the data points ($x$ and $y$) are sent to the function. If only one array of uncertainties is sent, they are assumed to be in the $y$ direction. The array function (from the pylab library) can be used to turn lists into arrays. It is also possible to read data from a file. The fitting function returns the best-fit parameters, their uncertainties, the reduced chi squared, and the degrees of freedom. The last two quantities are defined in the next section.
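Since fitting.py is not reproduced here, the sketch below uses `numpy.polyfit` as a stand-in to show the same workflow: lists are turned into arrays, one array of $y$ uncertainties is supplied, and the best-fit parameters and their uncertainties come back. The variable names and data values are made up, and `np.array` is the same function that pylab exposes as `array`.

```python
import numpy as np

# Turn lists of (made-up) measurements into arrays
xdata = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
ydata = np.array([3.1, 4.9, 7.2, 8.8, 11.1])
yerror = np.array([0.2, 0.2, 0.2, 0.2, 0.2])

# polyfit weights multiply the residuals, so pass 1/sigma (not 1/sigma**2).
# cov='unscaled' keeps the covariance matrix tied to the given uncertainties.
coeffs, cov = np.polyfit(xdata, ydata, 1, w=1.0 / yerror, cov='unscaled')

slope, intercept = coeffs
slope_err, intercept_err = np.sqrt(np.diag(cov))

print("slope     =", slope, "+/-", slope_err)
print("intercept =", intercept, "+/-", intercept_err)
```

Unlike the linear_fit function described above, this stand-in does not return the reduced chi squared or the degrees of freedom.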

(b) Uncertainties in the x and y Directions

A linear fit with uncertainties in both the $x$ and $y$ directions is performed in the same way. Arrays containing the data points ($x$ and $y$) and their uncertainties (in $y$ and $x$) are sent to the function. Note the order of the uncertainties! The uncertainty in $x$ is optional, so it is second. This is also consistent with the errorbar function, which takes the $y$ uncertainties before the $x$ uncertainties. When the results are plotted, some of the error bars may not show up because they are very small.
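For reference, a straight-line fit can also be set up directly with scipy.odr. This is a minimal sketch and not necessarily how fitting.py calls the module; the data values, the uncertainties, and the starting guess `beta0` are made up for illustration.

```python
import numpy as np
from scipy import odr

def line(beta, x):
    """Straight line with beta = [slope, intercept]."""
    return beta[0] * x + beta[1]

# Made-up data with uncertainties in both directions
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.1])
y_err = np.full_like(y, 0.2)
x_err = np.full_like(x, 0.1)

data = odr.RealData(x, y, sx=x_err, sy=y_err)  # sx and sy are standard deviations
model = odr.Model(line)
fit = odr.ODR(data, model, beta0=[1.0, 0.0]).run()

slope, intercept = fit.beta
slope_err, intercept_err = fit.sd_beta

print("slope     =", slope, "+/-", slope_err)
print("intercept =", intercept, "+/-", intercept_err)
```

ODR is an iterative method, so it needs a starting guess for the parameters; a rough estimate from the data is usually enough for a straight line.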

3. Interpreting the Results

Plotting data with error bars and a best-fit line together gives a rough idea of whether or not the fit is good. If the line passes through most of the error bars, the fit is probably reasonably good. (In advanced lab, the reduced chi square will also be used to judge the goodness of the fit.)
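The reduced chi square is conventionally defined as $\chi^2$ divided by the number of degrees of freedom, which for a straight-line fit is the number of data points minus the two fitted parameters. The sketch below assumes uncertainties only in the $y$ direction and a line $y = ax + b$; the function name and the sample values are hypothetical.

```python
import numpy as np

def reduced_chi_squared(x, y, sigma, a, b):
    """Return (chi^2 / dof, dof) for the line y = a*x + b."""
    residuals = y - (a * x + b)
    chi2 = np.sum((residuals / sigma) ** 2)
    dof = len(x) - 2  # two fitted parameters: slope and intercept
    return chi2 / dof, dof

# Made-up data and fit parameters
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.1])
sigma = np.full_like(y, 0.2)

rchi2, dof = reduced_chi_squared(x, y, sigma, a=1.99, b=1.05)
print("reduced chi squared =", rchi2, "with", dof, "degrees of freedom")
```

A value near one suggests that the scatter of the points about the line is consistent with the stated uncertainties. Values much larger than one point to a poor fit or underestimated uncertainties, and values much smaller than one suggest overestimated uncertainties.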

Additional Documentation

More information is available at https://docs.scipy.org/doc/scipy/reference/odr.html